At Aliz, we specialize in Google Cloud Platform (GCP) solutions. Our team participates in the development of a successful document management platform called AODocs, a truly cloud-native solution. We’d like to show you how we leverage certain GCP services during the development and operations of this application.

Using a banana for scale: AODocs has about 5 million installed users on the G Suite Marketplace, stores 25TB of data in a NoSQL database (excluding indexes) and 500TB of data in BigQuery, serves about 80 million requests per day with 100-150 instances, and has been running 24/7 for more than 8 years.
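For a rough sense of what those figures mean per instance, here is the average load implied by 80 million requests per day, assuming traffic were spread evenly over 24 hours. Real traffic is not uniform, so peak load per instance is higher than this average.

```math
\frac{80\,000\,000\ \text{requests/day}}{86\,400\ \text{s/day}} \approx 926\ \text{requests/s},
\qquad
\frac{926\ \text{requests/s}}{150\ \text{instances}} \approx 6.2
\;\text{ to }\;
\frac{926\ \text{requests/s}}{100\ \text{instances}} \approx 9.3\ \text{requests/s per instance}
```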

AODocs runs on Google App Engine, a Platform-as-a-Service, serverless solution on GCP, where we basically deploy WAR files to servlet containers. All the services we use provide scale-independent performance: a NoSQL database, full-text search, task queues, etc.

To have some data for the charts, we’ve created a basic anonymous tic-tac-toe game that randomly pairs connected players. You can also play against yourself by opening another browser profile or an incognito window. We invite you to play a game; you’ll then see it in the dashboards linked at the end. It’s fun!
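The post doesn’t show how players are paired, so here is a minimal sketch of random matching, not the game’s actual code. `Matchmaker`, `connect`, `disconnect` and `matchWaitingPlayers` are hypothetical names. The waiting pool lives in memory, which only works on a single instance; on App Engine, where requests are spread across many instances, the pool would have to live in shared storage such as Datastore.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

/**
 * Hypothetical sketch of random matchmaking: connected players wait in a pool,
 * and each matching round shuffles the pool and pairs players up.
 * In-memory only, so it assumes a single instance.
 */
class Matchmaker {
    private final List<String> waiting = new ArrayList<>();
    private final Random random;

    Matchmaker(Random random) {
        this.random = random;
    }

    /** Adds a newly connected player to the waiting pool. */
    synchronized void connect(String playerId) {
        waiting.add(playerId);
    }

    /** Removes a player who disconnects before being matched. */
    synchronized boolean disconnect(String playerId) {
        return waiting.remove(playerId);
    }

    /** Shuffles the pool and pairs players; an odd player out keeps waiting. */
    synchronized List<String[]> matchWaitingPlayers() {
        Collections.shuffle(waiting, random);
        List<String[]> pairs = new ArrayList<>();
        while (waiting.size() >= 2) {
            String first = waiting.remove(waiting.size() - 1);
            String second = waiting.remove(waiting.size() - 1);
            pairs.add(new String[] {first, second});
        }
        return pairs;
    }

    synchronized int waitingCount() {
        return waiting.size();
    }
}
```

Shuffling the whole pool and pairing neighbors gives every waiting player the same chance of meeting any other; a player left over from an odd-sized pool simply waits for the next round.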

Stackdriver is the central place where you can monitor your application’s health. It gives you a broad range of out-of-the-box metrics for most GCP services. You can easily set up graphs and alerts for the following:

Checking a couple of dashboards built from these graphs is part of our daily routine. They can be set up with literally a few clicks, and they bring great value by letting us spot errors or strange behavior in near real time.

As you would expect, you can access your application logs. Logs from nearly all GCP services are available on a central log monitoring page where you can easily browse the logs you need. Logs are grouped by request, so you can follow what a single user operation logged. You don’t have to bother with thread IDs or client IPs.

Logs are kept on this console for a configurable retention period, and you pay for the size of the logs stored; a few weeks is typically enough. To keep logs longer, you can configure exports to Cloud Storage, to BigQuery, or to your own storage through Pub/Sub.

Log-based metrics bring a powerful feature to your system.

Let’s assume you have a conditional branch in your code where you would normally just write the comment `// this should never happen`. At such places, you can instead put `log.warn("This shouldn't have happened")` and set up a log-based metric to monitor how many times it actually happens 🙂

In our game example, I added a log line at the point where a game is aborted, so I can easily monitor the number of aborted games over time.
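A minimal sketch of both log lines, assuming `java.util.logging` (the `log.warn` call above suggests an SLF4J-style logger instead; either works as long as the message text is stable). The class, methods and message texts are hypothetical. The log-based metric’s filter would match on the fixed message prefix; which log field to filter on depends on how the runtime writes log entries.

```java
import java.util.logging.Logger;

/** Hypothetical game service showing log lines meant for log-based metrics. */
class GameService {
    private static final Logger log = Logger.getLogger(GameService.class.getName());

    // Stable message prefix that a log-based metric's filter can match on.
    static final String GAME_ABORTED = "Game aborted";

    /** Logs one WARNING line per aborted game, so a metric can count them over time. */
    void abortGame(String gameId, String reason) {
        log.warning(GAME_ABORTED + ": gameId=" + gameId + ", reason=" + reason);
        // ... clean up the game state here (omitted) ...
    }

    /** Validates a board cell; any value other than 'X', 'O' or ' ' is a bug. */
    static char checkCell(char cell) {
        if (cell == 'X' || cell == 'O' || cell == ' ') {
            return cell;
        }
        // Instead of a silent "// this should never happen", log it so a
        // log-based metric shows how often it actually happens.
        log.warning("This shouldn't have happened: unexpected cell value '" + cell + "'");
        return ' ';
    }
}
```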

You can put graphs of these metrics on Stackdriver dashboards and configure custom alerts based on them. In our example, I can be notified if aborted games become too frequent, which might indicate an application error that prevents proper user matching.

Error Reporting is also based on logs, but it specifically looks for error-level messages with a stack trace. It automatically groups occurrences by stack trace, as if it had created a log-based metric and an alert for each kind of exception in our application.
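To make the grouping idea concrete, here is an illustrative sketch, not Error Reporting’s real algorithm. Errors are logged at `SEVERE` with the `Throwable` attached (assuming `java.util.logging`, where this includes the stack trace in the entry), and a hypothetical `fingerprint` function groups occurrences by exception type plus the top few stack frames.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.logging.Level;
import java.util.logging.Logger;
import java.util.stream.Collectors;

/** Hypothetical illustration of stack-trace-based error grouping. */
class ErrorGrouping {
    private static final Logger log = Logger.getLogger(ErrorGrouping.class.getName());

    /** Logs at SEVERE with the Throwable attached, so the entry carries a stack trace. */
    static void reportError(String message, Throwable e) {
        log.log(Level.SEVERE, message, e);
    }

    /**
     * Illustrative fingerprint: exception type plus class and method of the top
     * frames. Line numbers are left out so unrelated edits don't split a group.
     */
    static String fingerprint(Throwable e, int frames) {
        String top = Arrays.stream(e.getStackTrace())
                .limit(frames)
                .map(f -> f.getClassName() + "." + f.getMethodName())
                .collect(Collectors.joining("|"));
        return e.getClass().getName() + "@" + top;
    }

    /** Counts occurrences per fingerprint, like one metric per kind of exception. */
    static Map<String, Integer> group(Iterable<? extends Throwable> errors, int frames) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (Throwable e : errors) {
            counts.merge(fingerprint(e, frames), 1, Integer::sum);
        }
        return counts;
    }
}
```

Ignoring line numbers keeps a group stable across small code changes, at the cost of merging distinct failures that occur in the same methods.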

Checking Error Reporting regularly is part of our operations routine on GCP, and it has allowed us to catch many errors before they seriously affected customers. We constantly check this page during version rollouts, including in our staging environment.

It’s amazing how regularly the most subtle race conditions cause problems in large distributed systems.

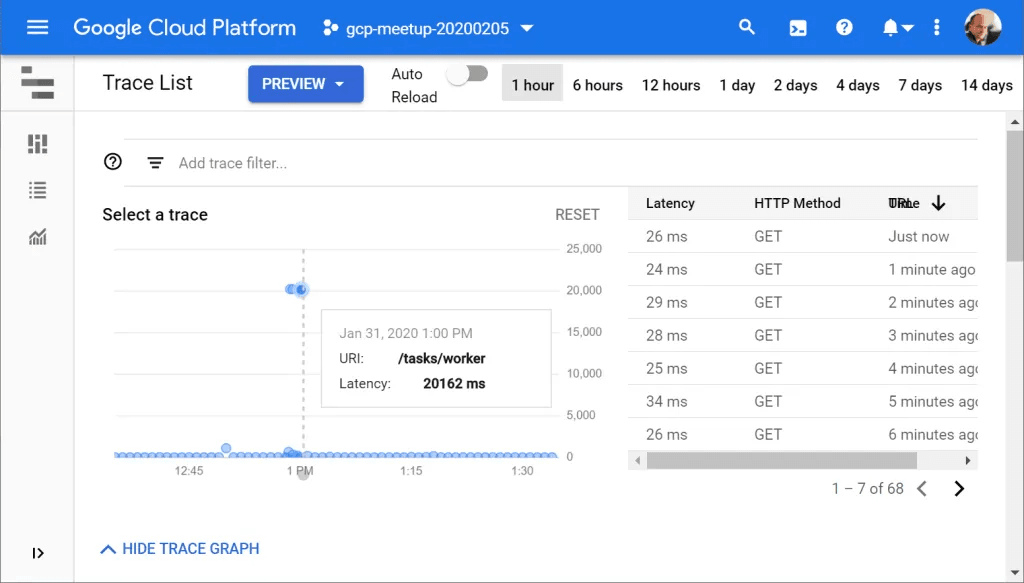

Traces are automatically recorded for a sample of application requests. They give an overview of what happened during the request and how long each operation took, such as Datastore access or external API calls.

This can give powerful insights for performance optimizations.

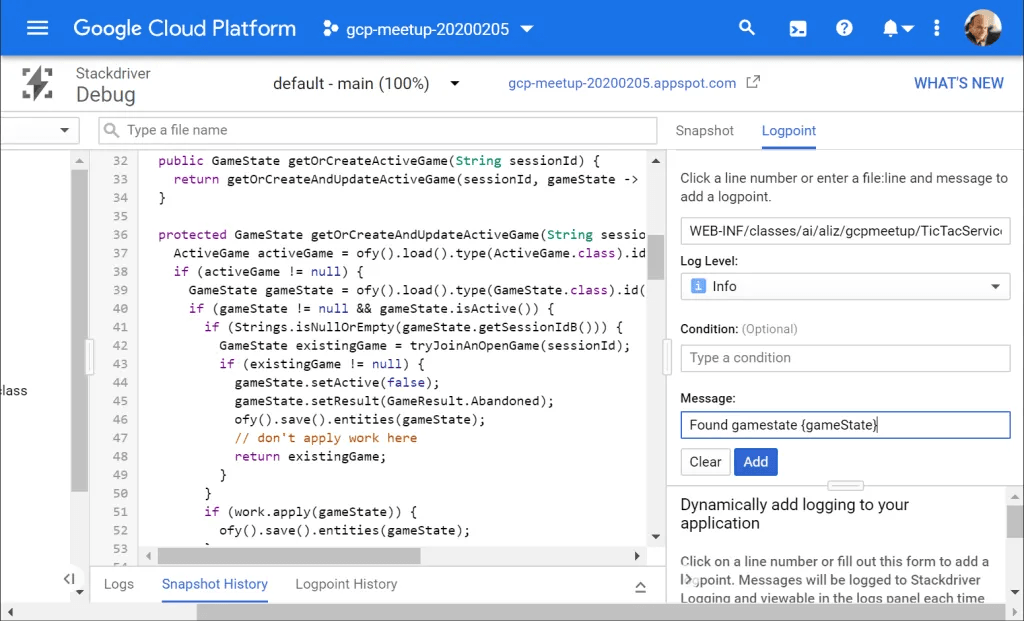

Cloud Debugger is extremely powerful and can be used safely even in a production environment. Don’t worry: breakpoints won’t suspend actual requests; the debugger just takes a quick snapshot.

When a certain kind of request takes a strange execution path, you might wonder what values certain variables hold during execution. With Cloud Debugger, you can instrument the application on the fly to:

You can also make these ‘breakpoints’ conditional.

Of course, using Cloud Debugger assumes that the error recurs from time to time, so you can’t use it to investigate a past incident. But if the error happens again, you can check the exact conditions very efficiently.

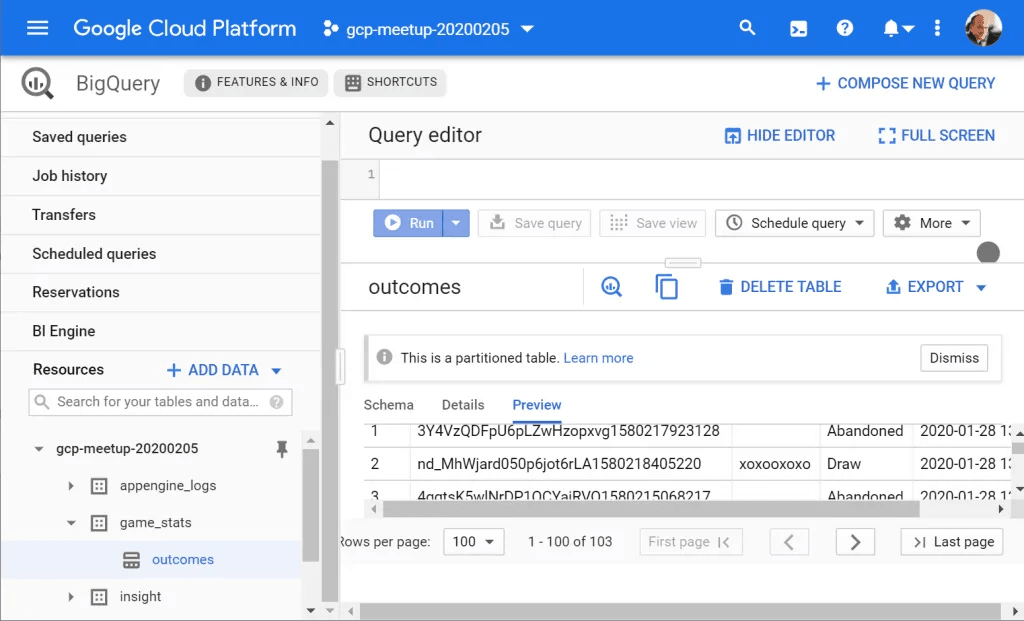

I can’t emphasize enough how powerful it is to have data about your application. All the application logs and metrics are also easily available in BigQuery, practically bottomless storage for anything you’ll need.

You can easily answer questions about

When working on GCP, you get BigQuery and Data Studio out-of-the-box, ready-to-use. You just pay for the data volume stored and the volume your queries read. There are certainly more sophisticated BI tools out there (which you should also be able to integrate if you prefer), but the tools you get included in GCP are already pretty powerful.

You can automatically stream GCP logs to BigQuery to make them available for ad hoc queries or reports.

You can also rely on BigQuery to collect custom data points from your application. In AODocs, we collect various custom diagnostic data points to get a more exact view of the usage of certain features. We also collect more detailed data about the deferred tasks we execute in task queues. In our game example, we can easily collect historical data about all the game outcomes.
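A sketch of collecting game outcomes as custom data points, assuming a hypothetical BigQuery table with `game_id`, `outcome`, `moves` and `finished_at` columns. To keep the example self-contained, the BigQuery write sits behind a hypothetical `RowSink` interface; in production it could be implemented with the `google-cloud-bigquery` client’s streaming insert, `bigquery.insertAll(InsertAllRequest.newBuilder(tableId).addRow(row).build())`.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical destination for analytics rows, separate from the transactional DB. */
interface RowSink {
    void insert(Map<String, Object> row);
}

/** In-memory sink for local testing; a real one could stream rows to BigQuery. */
class InMemoryRowSink implements RowSink {
    final List<Map<String, Object>> rows = new ArrayList<>();

    @Override
    public void insert(Map<String, Object> row) {
        rows.add(row);
    }
}

/** Records one row per finished game, matching the hypothetical table schema. */
class GameOutcomeRecorder {
    private final RowSink sink;

    GameOutcomeRecorder(RowSink sink) {
        this.sink = sink;
    }

    void recordOutcome(String gameId, String outcome, int moves, Instant finishedAt) {
        Map<String, Object> row = new LinkedHashMap<>();
        row.put("game_id", gameId);
        row.put("outcome", outcome); // e.g. "X_WON", "O_WON", "DRAW", "ABORTED"
        row.put("moves", moves);
        // ISO-8601 string such as "2019-05-01T12:00:00Z" for a TIMESTAMP column.
        row.put("finished_at", finishedAt.toString());
        sink.insert(row);
    }
}
```

Because the game logic only sees `RowSink`, the request path never depends on the analytics store directly, which fits the separation of historical and transactional data.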

This is a good way to separate historical data from transactional data. Just as you don’t store your application logs in the same database as your customer data, keeping historical data in BigQuery makes it accessible for BI reports without overloading your transactional database, whether that’s Cloud Datastore or Cloud SQL.

Data Studio is a more generic BI tool that you can use even outside GCP. For operating our application on GCP, we use it to create dashboards based on our custom data in BigQuery, but you can configure various other data sources. These dashboards are similar to the Stackdriver dashboards mentioned earlier, but with Data Studio we can use our own queries, custom data dimensions, filtering, and drill-down options.

Check out the report we’ve created for the tic-tac-toe game.